Abstract

Neural engineering is an emerging interdisciplinary field that utilizes engineering techniques to discover the function and manipulate the behavior of the human brain. Current research in neural engineering focuses on the development of treatments for neurological disorders that deplete the health of the nervous system and explores how the nervous system operates in both health and disease. Neural engineering has huge potential to relieve suffering in those who have lost functionality in their nervous systems, but the further development of this technology raises a number of ethical questions about identity, privacy, responsibility, and justice. These questions must be thoroughly addressed in order to guarantee the most responsible future development and use of neural engineering technologies.

Introduction

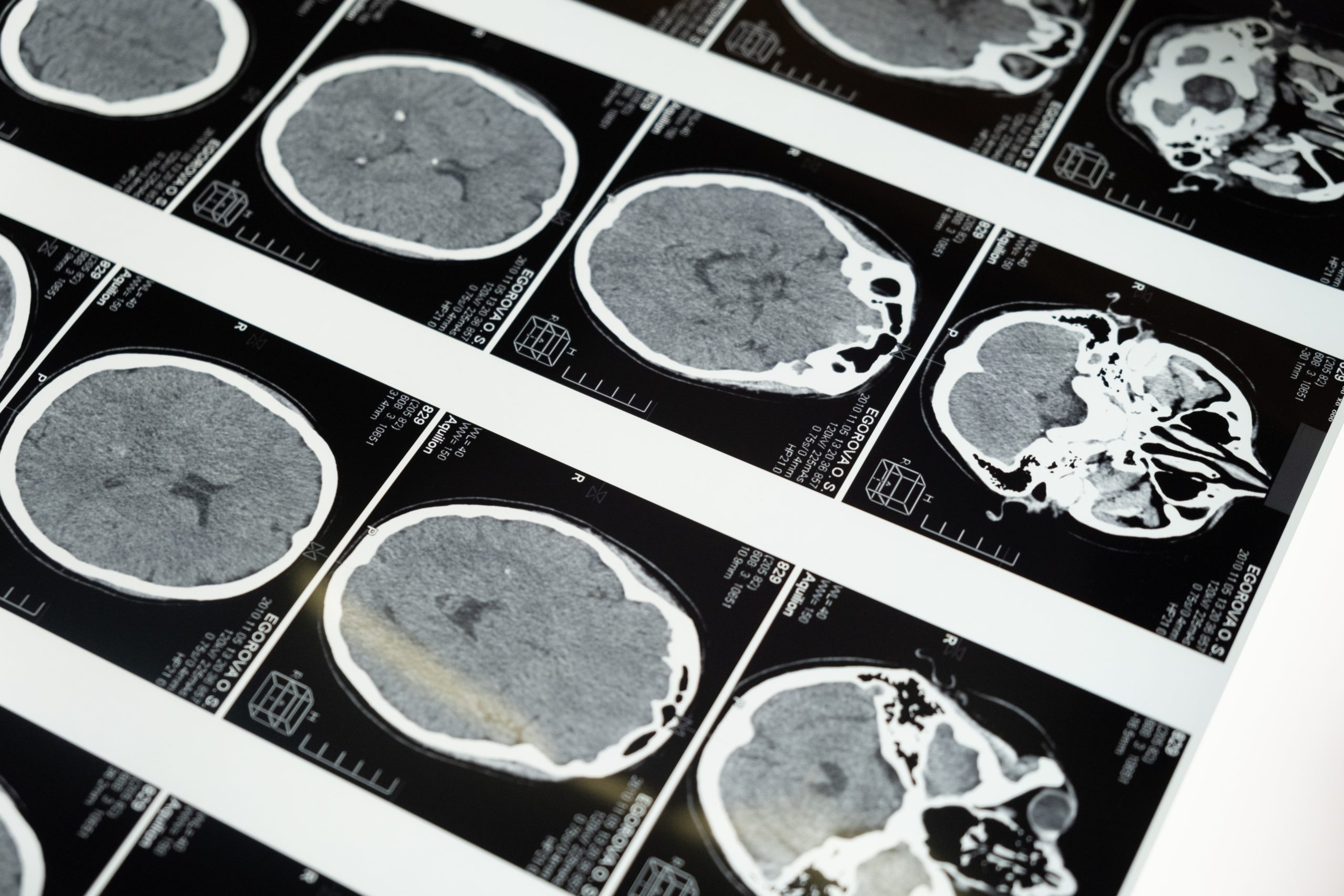

Neural engineering is a discipline in which engineering techniques are applied to understand, replace, repair, or enhance the brain. It is a very new field: the first international neural engineering conference was held in Capri, Italy, in 2003, and the field’s first academic journal, the Journal of Neural Engineering, was established the year after. Already, though, neural engineering has generated a great deal of excitement over the development of deep brain stimulation (DBS) and brain-computer interfaces (BCIs) to treat patients with neurological disorders [1].

Advancements within neural engineering have paved the way for a range of remarkable technologies. One of these is DBS, mentioned above, which involves implanting a neurostimulator that sends electrical impulses to the brain through implanted electrodes [2]. Researchers at the University of Washington’s BioRobotics Lab, for instance, have focused on closed-loop DBS in the hopes of managing motor conditions such as tremors, Parkinson’s disease, and dystonia [2]. DBS delivers electrical stimulation to an implantation site deep within the brain, assisting in motor control for patients who have difficulty performing everyday tasks. DBS has also been extended to the treatment of mental health disorders such as chronic depression, in which doctors implant tiny electrodes in the brain to help regulate a person’s mood. In standard DBS devices, however, stimulation is always on, so batteries deplete quickly and repeated invasive surgeries are needed to replace them.

Another exciting advancement is BCI technology. BCIs are “computational systems that form a communication pathway between the central nervous system and some output, be it a device or feedback to the user” [3]. Also at the University of Washington, researchers are working to create BCI-triggered DBS that would allow patients to control the level of stimulation, turning it on or off at their convenience [2]. Because the battery lasts longer when stimulation is not delivered constantly, this would reduce the need for frequent replacement surgeries. Some BCI-triggered DBS devices are also used to treat mental health disorders: they monitor signals from certain areas of the brain and use that feedback to apply electrical stimulation that elevates the patient’s mood. Other BCIs can enable paralyzed individuals to control a prosthetic arm just by thinking about completing the physical action [2]. Simply put, BCIs translate the user’s brain signals into commands that support a wide range of motor control, communication, and autonomic functions.
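The link between on-demand stimulation and battery life can be illustrated with simple arithmetic. The sketch below is only an illustration: the function name, the linear power model, and every number in it are hypothetical assumptions chosen for readability, not figures from any real DBS device or from the sources cited here.

```python
HOURS_PER_YEAR = 24 * 365


def estimated_battery_life_years(capacity_mah, stim_current_ma,
                                 baseline_current_ma, duty_cycle):
    """Rough battery-life estimate under an assumed linear model.

    duty_cycle is the fraction of time stimulation is on (1.0 = always on).
    All parameters are hypothetical; real devices are far more complex.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    # Average draw: electronics run constantly, stimulation only part-time.
    average_current_ma = baseline_current_ma + duty_cycle * stim_current_ma
    if average_current_ma <= 0:
        raise ValueError("average current draw must be positive")
    return capacity_mah / average_current_ma / HOURS_PER_YEAR


if __name__ == "__main__":
    # Illustrative values only (not measured from any device).
    always_on = estimated_battery_life_years(6000, 0.1, 0.02, duty_cycle=1.0)
    on_demand = estimated_battery_life_years(6000, 0.1, 0.02, duty_cycle=0.3)
    print(f"always on: {always_on:.1f} years, on demand: {on_demand:.1f} years")
```

Under these assumed values, lowering the fraction of time that stimulation is active lengthens the estimated interval between battery replacements, which is the intuition behind the claim above.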

However, the current and future applications of neural engineering technologies pose a variety of ethical concerns. Can a device impact the way an individual thinks of themselves or how others think of them [3]? How accessible do neural devices make the user’s experience to others? If a device stimulates an individual’s brain while they decide upon an action, are they still the author of the action? Should individuals be held accountable for every action in which the device plays a role? Such questions are fundamental to the application of neural engineering, as the functions of the brain are intimately linked with an individual’s and a culture’s understanding of identity, privacy, responsibility, and justice. All of them must therefore be addressed in order to ensure the ethical development and implementation of neural technology. We will discuss these questions through a framework developed from a review of several sources, including work from Klein et al. as well as Yuste and Goering [4, 6].

Identity

Our self-understanding is often influenced by the relationships we have with technology. Humans have come to rely heavily upon tools such as laptops, GPS devices, and smartphones (hence the common use of phrases such as “I’m more of a Mac person” or “I can’t live without my phone”) [4]. Of course, the effects of biomedical technology prove much more significant than reliance on one’s laptop to complete a class assignment. When technology not only aids us but also replaces body parts or functions, as is the case with many of the applications of neural engineering, its influence is likely to be greater than that of any external tool.

There has been evidence of such influence in the use of other assistive devices. For instance, a 2009 study found that people who are blind sometimes perceive their canes to be a part of their perceptual systems [5]. Canes are utilized as sensory substitution devices because they provide blind individuals access to features of the world that they would not otherwise be able to experience. Because a blind person who uses a cane experiences stimulation at the end of their cane rather than in their hands, the cane is crucial to their interactions with their environments. Thus, the researchers of this study found that “sensory substitution in terms of mind-enhancing tools unveils it as a thoroughly transforming perceptual experience” [5]. Similarly, individuals who have undergone deep-brain stimulation through electrodes in the brain have reported feeling “an altered sense of agency and identity” [6]. In a 2016 study, a man who used a brain stimulator to treat his depression reported that he questioned whether the way he was interacting with others was due to the device, his depression, or something deeper about himself. He noted that his stimulator “blurs to the point where I’m not sure…frankly, who I am” [6]. Determining a response to a situation has generally been thought of as something decided solely by one’s own mind, but if we alter those responses through “smart” implants, we risk undermining a person’s capacity for self-expression. If BCIs enable immediate translation between a thought and an action, people may eventually find themselves behaving in ways that they struggle to recognize as their own.

When technology functions so well that it becomes an essential part of our lives, we can say that it has become a part of us, transcending the status of a mere tool. The experience of coming to identify with a neural technology is highly complex, because unlike external devices, such as canes, neural devices are implanted in the brain [4]. This can create a more drastic effect because a person’s brain function is so intimately connected to their understanding of their own identity. Our brains are who we are, which is why other implantable devices, such as cardiac pacemakers, have not had so radical an impact on the identities of the people using them. A device that assists in the beating of someone’s heart will be perceived very differently from a device that can impact their thoughts and emotions, because the heart is less obviously linked to the central source of identity than the brain is [4].

Fundamentally, neural technology involves direct interactions between the brain and machines. Because the brain is so incredibly central to our understanding of what it means to be human, anything that manipulates or replaces its function could have a massive impact on how an individual perceives themselves. Blurring an individual’s sense of identity brings up many ethical questions regarding the intentions of each of their actions. For instance, a patient with DBS technology could experience an electrical stimulation that causes them to say something that they didn’t mean to. Should this speech be considered their own, or is it more appropriate to attribute it to their neural device? Additionally, to what extent can we separate the individual from their neural device if it can affect their actions? Without a strong sense of identity, individuals with implanted neural devices may become uncertain about the motivations of their actions. As creators of neural technology, engineers must be extremely mindful of these potentially massive impacts on an individual’s identity.

Privacy

What happens within our minds has always been thought of as private. Criminal jurisprudence has upheld that individuals cannot be punished for their thoughts, and it is widely accepted that it is completely up to each person’s discretion whether or not they want to share their thoughts and emotions with others [7].

We are all likely familiar with how our data trails can supply others with our personal information: whenever we frequent commercial websites, tracking data such as cookies is used to target advertisements based on our browsing histories. However, it’s when technology can “read” the mind of someone who isn’t actively consenting that these long-venerated notions of privacy are challenged [2]. BCIs are capable of directly extracting highly sensitive information from the brain, including truthfulness, psychological traits, mental states, and attitudes toward other people, all types of information we might be hesitant to share openly with others. Currently, language and non-verbal communication act as the middlemen for understanding the contents of another person’s mind, but they can obfuscate heavily, and we can choose to keep certain personal thoughts private. As neural technology continues to develop, however, it is very likely that there will be an increased capacity to directly observe others’ minds [8]. An individual’s thoughts could eventually be accessed via a database, leading to a wide range of threats to their privacy.

Furthermore, the neural technology used to monitor and control systems within the brain is becoming increasingly complex. As a result of these innovations, many neural technologies are integrated with wireless technologies (similar to the radio-frequency identification systems used in smartphones) that are vulnerable to attack from hackers [6]. Recent studies have demonstrated the potential for such vulnerabilities in BCIs, which cybercriminals could exploit to illegally access highly sensitive neural information and to track or even manipulate an individual’s mental experience [6, 9].

The ideal situation would be one in which engineers completely eliminate the possibility of hacking at the start, but securing neural devices is much more complex than simply installing anti-malware software on a computer. As Tamara Denning, a professor of computing at the University of Utah, says, “instead of protecting…someone’s computer, we are protecting a human’s ability to think and enjoy good health” [4]. Unfortunately, there is always going to be some risk to a patient’s privacy when their neural data is accessible from an external source. That risk does not excuse engineers from taking all relevant security precautions to protect users’ privacy and prevent the hacking of neural devices. The right to privacy, as part of a common ethical framework called the rights approach, makes it essential that citizens have the ability to keep their neural data private. So, despite the inevitable threats, engineers must mitigate these risks as much as possible to ensure that they are doing everything they can to protect patients’ right to privacy.

According to bioethicists, as part of that pursuit of privacy, engineers must make opting out of data sharing the default [6]. Sharing neural data would then be treated the way that organ and tissue donation is in most countries: individuals would be informed of the risks that neural technology poses to their privacy and would need to explicitly opt in to sharing neural data. This creates a much more secure process that clearly states exactly who will have access to the data and for how long [6]. Unfortunately, current BCI technology operates through the collection and storage of user data, so opting in means consenting to a device that has the potential to diminish privacy. However, “BCIs are most likely used only as a last resort treatment option for both motor control and neurostimulation,” so more often than not, individuals will be more concerned with the life-saving qualities of these devices than with potential security threats [4]. If an individual chooses to opt out, engineers can refer them to traditional DBS systems, which are less convenient but would “avert at least some of the privacy concerns” [4]. Considerations of this kind are necessary to protect the rights of individuals with neural devices.
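One way to picture an opt-out default is as a consent record that permits no sharing until the user explicitly grants it, with each grant naming a recipient and an expiry time. The following is a minimal sketch under that assumption; the class names, fields, and recipient labels are hypothetical and do not describe any existing device, standard, or the proposal in [6] beyond its general idea.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ConsentGrant:
    """One explicit opt-in: who may receive the data, and until when."""
    recipient: str          # hypothetical label, e.g. "clinic"
    expires_at: datetime


@dataclass
class NeuralDataConsent:
    """Consent record whose default state shares nothing (opt-out default)."""
    grants: list[ConsentGrant] = field(default_factory=list)

    def opt_in(self, recipient: str, duration: timedelta, now: datetime) -> None:
        # Sharing starts only after an explicit, time-limited grant.
        self.grants.append(ConsentGrant(recipient, now + duration))

    def may_share(self, recipient: str, now: datetime) -> bool:
        # Allowed only for a named recipient whose grant has not expired.
        return any(
            g.recipient == recipient and now < g.expires_at
            for g in self.grants
        )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    consent = NeuralDataConsent()
    print(consent.may_share("clinic", now))   # no grant has been given yet
    consent.opt_in("clinic", timedelta(days=30), now)
    print(consent.may_share("clinic", now))   # explicit, unexpired grant
```

In this sketch, the absence of a grant is treated as refusal, so a forgotten or expired grant fails safe rather than silently continuing to share data.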

Responsibility

Inextricably linked to the ethical questions of identity and privacy are those of responsibility. If an individual is uncertain about their own identity, should they be held responsible for their actions and the consequences of those actions? Taking responsibility for our own actions and holding others accountable are hallmarks of living morally. However, neural devices can complicate traditional notions of responsibility.

Take, for instance, the case of a patient who uses a robotic arm prosthetic that is controlled by a BCI system. Imagine the patient is driving down her street when she suddenly swerves her car into a neighbor’s fence [4]. If the patient claims that she did not intend to turn the car and that the movement was solely due to the prosthetic, she still might be held responsible for choosing to have a neural device in the first place. This reasoning rests on a consequentialist ethical framework, under which an action is judged only by its consequences: whatever the patient intended, the result negatively affected another person (or in this case, their property). The patient could also be held responsible for failing to train adequately with the prosthetic, which may have increased the risk of harm to herself and others. However, she could be absolved of these responsibilities if the device was poorly designed, hacked, or made with faulty software that caused it to malfunction.

Under the justice and fairness ethical frameworks, individuals with neural devices may be given special consideration because they have an expressed need that warrants treating their actions differently from similar actions performed by people who do not need neural devices. This is because individuals with disabilities can come to rely on neural technology to secure social and other goods [4]. In these frameworks, an individual whose device malfunctions should not be held responsible for any damages caused by the technology, and engineers should be held liable for any faulty technology that they produce, as extensive quality checks should be done before releasing this life-saving technology for public use. However, if an individual fails to properly train with and acclimate to a functional neural device, they must be held responsible for their actions.

Justice

As is the case with every health-related technology, neural devices raise ethical concerns about justice with regard to the distribution of harms and benefits and require the inclusion of perspectives from all who will be affected. When scientific or technological decisions are based upon a narrow set of systemic or social concepts, the resulting technology can privilege certain groups but harm others [6].

As with most cutting-edge biomedical technology, the benefits of neural technology are more likely to be experienced by people with a high socioeconomic status. A 2015 study found that all interviewed patients, most of whom had already experienced difficulties in receiving sufficient financial support for very technical devices, stressed the need to ensure fair access to BCI technology [11]. Studies like this suggest that neural technology, like many other types of high-tech medical devices, is more likely to be available to those who can afford its full price, further widening disparities between people of differing socioeconomic backgrounds. From a justice and fairness ethical standpoint, this is not appropriate, as socioeconomic background should not be a prerequisite for obtaining the best possible technology to ensure a good quality of life. All individuals who express a need for neural technology to improve their quality of life should have equal access to it.

Furthermore, it is important to note that groups who are differently socially positioned, such as disabled individuals who are the intended beneficiaries of neural technology, have a right to have their perspectives thoroughly considered. The concerns of those who need this technology the most must be addressed throughout the development process to ensure that the end products are as inclusive as they can be, as failing to take such concerns into account can lead to feelings of alienation. For instance, when Apple first released its HealthKit app in 2014, it boasted of its ability to record a great variety of statistics, including blood pressure, daily steps, respiratory rates, and blood-alcohol levels. However, it failed to consider one of the most basic biological processes: menstrual cycles [12]. The result was that many individuals who menstruate felt that this product was not for them because it did not address all their medical needs. Too often, the input of marginalized groups has been ignored, but this is not an ethical way to address problems that disproportionately affect people of minority backgrounds. The individuals who will be most affected by neural technology have a right to contribute their thoughts to the design of devices.

Conclusion

Balancing moral, ethical, and technical considerations is something that all engineers must handle throughout the development of life-changing devices, and this is especially true when it comes to something as intimate as neural technology. The function of the brain is closely connected to an individual’s understanding of identity, responsibility, privacy, and justice, so neural technology can change how individuals perceive themselves, how they approach their moral responsibility to answer for the consequences of their actions, and how they define privacy. Additionally, considering that neural engineering technologies so strongly influence an individual’s ability to function, it is essential that engineers address ethical questions of justice and fairness that determine who has access to these devices, as well as whose voices must be included in the decision-making process.

Beyond ensuring that they are upholding ethical principles during the design process, engineers have a responsibility to ensure that all decisions made by users of neural technology are made with informed consent. They must warn users of the effects that neural technology can have on their identity and privacy, as well as the fact that they can be held responsible for actions associated with their neural devices. The basic difference between consent and informed consent is patients’ knowledge of what a decision means; informed consent requires that the user understands all of the advantages and disadvantages of neural technology before choosing to use it [10]. In essence, engineers cannot seek to implement life-changing technology without thoroughly informing the individuals who will be directly impacted by it.

The ethical questions posed throughout this paper need to be addressed now in order to inform engineers’ decisions during research and development and should eventually be further fleshed out during implementation. Without careful consideration of the ethical implications of neural technology, it would be highly immoral to continue its development.

By Sabrina Sy, Viterbi School of Engineering, University of Southern California

About the Author

At the time of writing this paper, Sabrina was a second-year biomedical engineering student from Monterey Park, a suburb in the Los Angeles area. In the future, she hopes to create medical technology that will address the health needs of traditionally marginalized groups. When she’s not working on MATLAB projects and physics problem sets, you can find Sabrina spending way too much time reading Wikipedia articles or surfing at Sunset Beach.

References

[1] B. Gordijn and A. M. Buyx, “Neural engineering: The ethical challenges ahead,” in Scientific and Philosophical Perspectives in Neuroethics, J. J. Giordano and B. Gordijn, Eds. 2010. Available: https://www.researchgate.net/publication/289301325_Neural_engineering_The_ethical_challenges_ahead.

[2] A. Ansari. “Ethics as a cornerstone of neural engineering research.” Center for Neurotechnology: a National Science Foundation Engineering Research Center. http://www.csne-erc.org/engage-enable/post/ethics-cornerstone-neural-engineering-research (accessed Oct. 11, 2020).

[3] L. S. Sullivan et al., “Keeping Disability in Mind: A Case Study in Implantable Brain–Computer Interface Research,” Sci. Eng. Ethics, vol. 24, no. 2, pp. 479-504, 2018. Available: https://pubmed.ncbi.nlm.nih.gov/28643185/.

[4] E. Klein et al., “Engineering the Brain: Ethical Issues and the Introduction of Neural Devices,” Hastings Cent. Rep., vol. 45, no. 6, pp. 26, 2015. Available: https://pubmed.ncbi.nlm.nih.gov/26556144/.

[5] M. Auvray and E. Myin, “Perception with compensatory devices: from sensory substitution to sensorimotor extension,” Cognitive Science, vol. 33, no. 6, pp. 1036-1058, May 2009, doi: 10.1111/j.1551-6709.2009.01040.x.

[6] R. Yuste and S. Goering, “Four ethical priorities for neurotechnologies and AI,” Nature, vol. 551, no. 7679, pp. 159-163, 2017. Available: https://www.nature.com/articles/551159a.

[7] G. S. Mendlow, “Why Is It Wrong To Punish Thought?” Yale L. J., vol. 127, no. 8, pp. 2342-2386, 2018.

[8] S. Burwell, M. Sample, and E. Racine, “Ethical aspects of brain computer interfaces: a scoping review,” BMC Med Ethics, vol. 18, no. 60, Nov. 2017, doi: 10.1186/s12910-017-0220-y

[9] M. Ienca and P. Haselager, “Hacking the brain: brain–computer interfacing technology and the ethics of neurosecurity,” Ethics and Information Technology, vol. 18, Apr. 2016, doi: 10.1007/s10676-016-9398-9

[10] C. P. Selinger, “The right to consent: Is it absolute?” British Journal of Medical Practitioners, vol. 2, no. 2, pp. 50-54, June 2009.

[11] S. Schicktanz, T. Amelung, and J.W. Rieger, “Qualitative assessment of patients’ attitudes and expectations toward BCIs and implications for future technology development,” Frontiers in systems neuroscience, vol. 9, no. 64, Apr. 2015, doi: 10.3389/fnsys.2015.00064

[12] M. Peña. “Ignoring Diversity Hurts Tech Products and Ventures.” eCorner. https://ecorner.stanford.edu/articles/ignoring-diversity-hurts-tech-products-and-ventures/ (accessed Nov. 15, 2020).

Links for Further Reading

Brain-Computer Interfaces in Medicine